Today Broadcom is launching its next generation of switch ASICs with the Broadcom Tomahawk 6 series. This is a new 102.4Tbps switch that can handle up to 64 ports of 1.6TbE. Yes, we are now moving from the “Gigabit” to the “Terabit” Ethernet port era. The two new ASICs, the Broadcom BCM78910 and BCM78914, offer two different configurations for different applications.

Broadcom Tomahawk 6 Launched for 1.6TbE Generation

In addition to the higher bandwidth, the new switch chips need SerDes to power the ports, as well as improved load balancing and telemetry. We have Broadcom’s launch deck that generally highlights these points.

The idea behind these new switches is that they can be used to scale up or scale out. Scale-up, providing massive bandwidth between a smaller number of nodes, is one option. The other is scale-out, connecting massive numbers of accelerators and nodes.

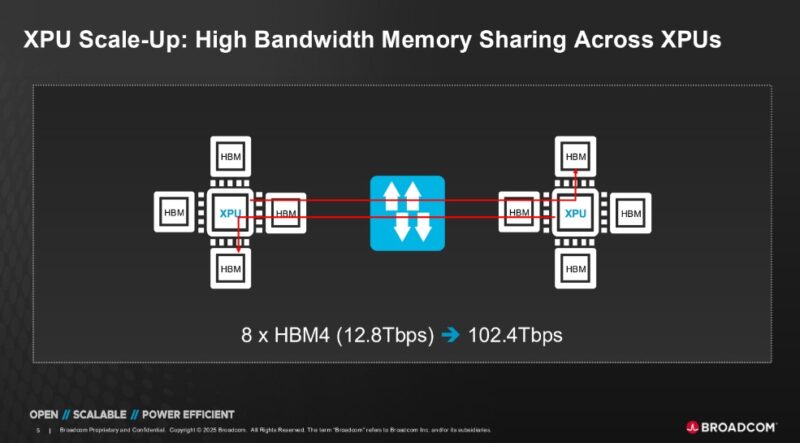

On the scale-up side, here is the scary one showing HBM4 to switch bandwidth.

On the scale-out side, there is a need to scale to hundreds of thousands of accelerators and beyond.

One of the neat features of the new chips is the option for co-packaged optics, as well as a choice of either 512x 200G PAM4 or 1024x 100G PAM4 SerDes.

Using the 200G PAM4 option, the idea is that one can configure up to 512 XPUs on a single switch. (512 x 200G = 102.4Tbps.)
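For those who want to check the math, here is a quick sketch of the lane arithmetic. The function names are ours, purely for illustration. The only inputs are the lane counts and speeds quoted above.

```python
from fractions import Fraction


def total_tbps(lanes: int, lane_gbps: int) -> Fraction:
    """Aggregate bandwidth in Tbps for a given SerDes configuration."""
    return Fraction(lanes * lane_gbps, 1000)


def max_ports(lanes: int, lane_gbps: int, port_gbps: int) -> int:
    """Number of ports of a given speed that the lanes can form."""
    return (lanes * lane_gbps) // port_gbps


# Both SerDes options land on the same aggregate bandwidth.
for lanes, lane_gbps in [(512, 200), (1024, 100)]:
    print(f"{lanes} x {lane_gbps}G = {float(total_tbps(lanes, lane_gbps))} Tbps")

# Port counts for the 512 x 200G option.
print("1.6TbE ports:", max_ports(512, 200, 1600))
print("200GbE ports:", max_ports(512, 200, 200))
```

With the 200G option, 64 ports of 1.6TbE works out to eight lanes per port, while running every lane as its own 200GbE port gives the 512-way radix.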

On the scale-out side, if you were only using 200GbE, that means that you have a bigger radix and thus can use fewer network switch layers.
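To make the radix point concrete, here is a rough sketch using the textbook non-blocking fat-tree model, where identical radix-k switches support k²/2 endpoints in two tiers and k³/4 in three. This is an idealized topology that we are assuming for illustration, not something taken from Broadcom's deck, and real fabrics are often oversubscribed or built differently. The radix values simply come from dividing 102.4Tbps by the port speed.

```python
def fat_tree_endpoints(radix: int, tiers: int) -> int:
    """Max endpoints in a non-blocking fat-tree of identical radix-k switches.

    Every tier except the top splits its ports half down, half up,
    which gives 2 * (k/2)**tiers endpoints.
    """
    return 2 * (radix // 2) ** tiers


def tiers_needed(radix: int, endpoints: int) -> int:
    """Smallest number of switch tiers that can attach `endpoints` devices."""
    tiers = 1
    while fat_tree_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


# 102.4T split into 1.6TbE, 800GbE, or 200GbE ports.
for radix in (64, 128, 512):
    print(
        f"radix {radix}: 2-tier max = {fat_tree_endpoints(radix, 2)}, "
        f"tiers for 100,000 endpoints = {tiers_needed(radix, 100_000)}"
    )
```

Under this model, a 512-port switch reaches 131,072 endpoints in two tiers, while a 64-port configuration of the same chip would need four tiers to attach 100,000 endpoints.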

The advantage of using fewer switches and switch tiers is not the cost per switch. Usually the cost of new switches is higher than that of older switches. The savings come from using fewer of them, along with fewer optics and cables, less power, and so forth. It also saves on latency by having fewer hops.
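Here is a back-of-the-envelope sketch of where those savings come from. It assumes an idealized non-blocking fat-tree, which is our assumption rather than anything in the launch deck, and compares a 512-port 200GbE switch against a 256-port 200GbE switch (51.2Tbps / 200G). The switch count is a lower bound derived from ports consumed, so treat the output as an estimate.

```python
def tiers_needed(radix: int, endpoints: int) -> int:
    """Smallest tier count whose fat-tree capacity covers `endpoints`."""
    tiers = 1
    while 2 * (radix // 2) ** tiers < endpoints:
        tiers += 1
    return tiers


def fabric_estimate(radix: int, endpoints: int) -> dict:
    """Rough fabric size for a non-blocking fat-tree (idealized model)."""
    tiers = tiers_needed(radix, endpoints)
    # Each tier boundary carries one uplink per endpoint to stay non-blocking.
    inter_switch_links = (tiers - 1) * endpoints
    # Endpoint-facing ports plus both ends of every inter-switch link.
    ports_used = endpoints + 2 * inter_switch_links
    return {
        "tiers": tiers,
        "max_switch_hops": 2 * tiers - 1,  # up to the top tier and back down
        "inter_switch_links": inter_switch_links,
        "min_switches": (ports_used + radix - 1) // radix,  # ceiling division
    }


for radix in (512, 256):
    print(radix, fabric_estimate(radix, 131_072))
```

For 131,072 endpoints, the higher-radix switch needs two tiers and three switch hops at most, versus three tiers and five hops, with half the inter-switch links. Each of those links typically means optics or cables on both ends, which is where much of the cost and power goes.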

With Tomahawk 6, we get a new Global Load Balancing 2.0.

The new Cognitive Routing helps balance load across the full network path. A simple model here is that as we get more devices operating at high speed, the network needs to get smarter on how it moves data around.
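As a toy illustration of why a "smarter" network matters, the sketch below compares static hash-based ECMP with a load-aware choice that always picks the least-loaded path. To be clear, this is not Broadcom's algorithm, which is not detailed here. It is a generic model with hypothetical flow names and sizes, meant only to show the difference between ignoring load and reacting to it.

```python
import zlib


def ecmp_path(flow_id: str, num_paths: int) -> int:
    """Static ECMP: hash the flow identifier, ignoring current path load."""
    return zlib.crc32(flow_id.encode()) % num_paths


def least_loaded_path(load: list) -> int:
    """Load-aware choice: pick the path carrying the least traffic so far."""
    return min(range(len(load)), key=load.__getitem__)


def place(flows, num_paths: int, adaptive: bool) -> list:
    """Assign each (flow_id, gbps) to a path and return the per-path load."""
    load = [0.0] * num_paths
    for flow_id, gbps in flows:
        path = least_loaded_path(load) if adaptive else ecmp_path(flow_id, num_paths)
        load[path] += gbps
    return load


# Hypothetical workload: eight large 400Gbps flows over four equal-cost paths.
flows = [(f"xpu{i}->xpu{i + 8}", 400.0) for i in range(8)]
print("static hash:", place(flows, 4, adaptive=False))
print("load-aware: ", place(flows, 4, adaptive=True))
```

With a handful of large flows, which is typical of AI traffic, hashing can stack several flows on one link while others sit idle. The load-aware version spreads equal-sized flows evenly by construction.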

We will let you read through this slide on Cognitive Routing 2.0.

In addition, operators such as cloud providers constantly push for better telemetry.

Broadcom also has a number of options for connecting devices that it had a slide on in the launch deck.

The company makes not just switches, but also NICs and more.

Here is the Scale-Up Ethernet diagram for folks.

Broadcom’s switches and NICs are used all over.

The summary is basically that Tomahawk 6 is a 102.4Tbps switch that supports up to 1.6Tbps links and has different SerDes options.

Along with that there are also several new features to handle the higher speeds.

Final Words

Over the past few weeks I have seen three different Tomahawk 6 switches. These have been listed simply as 102.4T switches before this announcement. For a lot of folks, 102.4Tbps is an arbitrary number. To put it into some perspective, we have seen estimates of global Internet bandwidth in the 1200-1700Tbps range in recent years. To be clear, the move toward Ultra Ethernet and faster switching is great, as is the ability to scale out to larger network topologies with fewer layers of switching. In a world of growing AI clusters, networking is a significant cost and consumes a notable amount of power. Broadcom now has the big switch ASIC in the market.

A major challenge Broadcom is facing more generally is that NVIDIA is effectively closing its accelerator ecosystem to non-NVIDIA NICs and PCIe switches. Broadcom also makes many custom XPUs and AI accelerators, so it is a heavyweight in the AI space. It feels a bit like the market is starting to bifurcate.

Hopefully we will get to take apart a Tomahawk 6 switch soon. The last Tomahawk switch we took apart was in the Tomahawk 4 era.

In the 51.2T switch era, we took apart a Marvell Teralynx 10 switch if you want to see inside a current generation switch.

In 10 years, there will be dozens of forum posts on how to mod these with Noctua fans and make them bedroom homelab friendly.

@TurboFEM

Unlikely.

The power and noise of these beasts make them untenable for anyone but a collector/museum after their DC time is up.

Just look at how many 1st gen 100 GbE switches from 15+ years ago are duking it out in home labs. Rounds to zero.

I tend to agree on this one. 51.2T switches were often 2.4-3kW devices. The trend is total switch power goes up but power per bit goes down.

Perhaps bigger is the delta between high-end and what is available at low power. Companies are investing in the high-end AI switches, but not as much in the lower-power devices that sell new for $1500 and under. PCIe Gen6 servers will be 800Gbps capable, Gen7 will follow soon thereafter at 1.6Tbps. Using 10GbE in the PCIe Gen7 era will be a bigger gap to high-end server networking than using 1GbE as 100GbE started coming out.

Broadcom…how I loathe thee.