Kicking off today is the annual Computex tradeshow in Taiwan. Home to countless system and device manufacturers, Computex is a cornucopia of consumer electronics, and these days is also the biggest PC-centric show of the year. And even though it takes place in May, barely halfway through the year, the show routinely sets the stage for the consumer and server products slated to launch in the tech industry’s critical third and fourth quarters.

There are several major keynotes during this year’s show. While Intel has passed on hosting a keynote this year – they’re essentially smack-dab in the middle of their product cycles – AMD, Qualcomm, and NVIDIA are all at the show. And, as the largest of the major tech companies at the show, it’s perhaps only fitting that NVIDIA gets to kick things off with the first major keynote.

NVIDIA Computex 2025 Keynote Preview

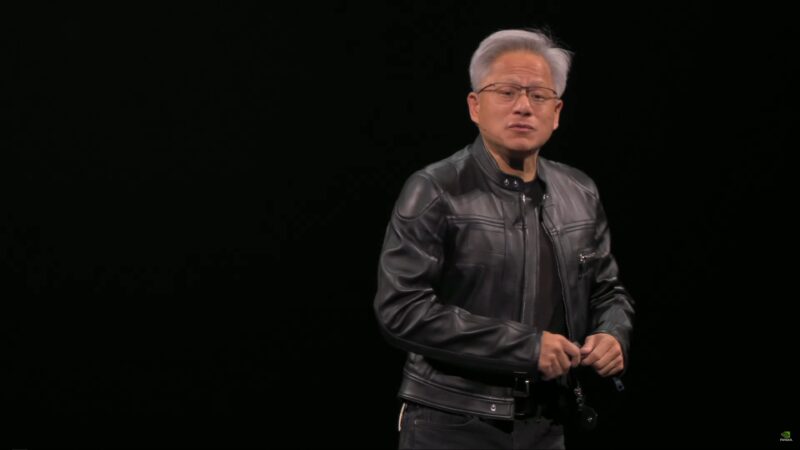

As is normally the case, NVIDIA CEO (and leather jacket enthusiast) Jensen Huang will be headlining the company’s Computex 2025 keynote. NVIDIA’s predominant business segment these days is the data center business – backed by the explosion in demand for hardware for AI training and inference – and it’s AI that NVIDIA will be focusing on for this year’s keynote.

In terms of products, NVIDIA just unloaded a ton of major product announcements two months ago at GTC 2025, the company’s own conference, so don’t expect many hardware announcements from NVIDIA at Computex. Still, in recent years the company has used Computex to make some smaller hardware announcements, along with updating their customers and partners on the status of their big initiatives. Even with NVIDIA’s increasing vertical integration, the company is still heavily reliant on partners in Taiwan to build and ship systems integrating NVIDIA’s GPUs, CPUs, and networking gear, so a key part of their Computex keynotes is recognizing those partners and helping to promote them.

We should also expect to see an update on NVIDIA’s various software initiatives. With a lot of NVIDIA’s software development decoupled from hardware development, the company can (and does) launch new software packages and services year-round, so Computex is as good a place as any to show off some of their latest software.

NVIDIA’s keynote is scheduled to run for 90 minutes, and will kick off at 8pm PT/11pm ET/11am CST/03:00 UTC.

NVIDIA Computex 2025 Keynote Coverage Live

At this point we’re just waiting for NVIDIA’s keynote to kick off. Everyone is getting seated, and NVIDIA is playing their holding loop.

And here we go!

This year’s opening video: This is how intelligence is made.

NVIDIA is continuing their theme/strategy from GTC of pushing the concept of token generation as the fundamental unit of AI computing, and thus of all the things they enable via AI.

And here’s Jensen!

(Jensen’s parents are apparently in the audience; he’s giving them a shout-out)

NVIDIA is a regular Computex attendee, not to mention having significant roots with other companies in the country. So Jensen likes to talk about those roots at the show.

“We are at the epicenter of the computer ecosystem”

“When new markets have to be created, we have to create them here”

“I promise I’ll talk about AI. And we’ll talk about robotics”

“The NVIDIA story is the story of the reinvention of the computing industry”

NVIDIA was a chip company that wanted to create a platform, and they’ve essentially done that with CUDA. They’ve since grown out farther and farther from there, with whole systems (DGX), and eventually whole racks (NVL72).

Besides making fast chips, key to NVIDIA’s success in the AI field has been leveraging their chip-to-chip networking technologies, which make it possible to scale up systems large enough to handle the compute-intensive tasks that AI processing requires.

“The modern computer is the entire datacenter”

“No technology company in history has revealed a roadmap for 5 years ahead in time”

“NVIDIA is not only a technology company anymore. It is an essential infrastructure company”

And back to the classic industrial revolution comparisons: Jensen is talking about the need to build out infrastructure all across the world. In the old age it was electricity; in Jensen’s age, it’s intelligence. In 10 years’ time, he says, AI will be integrated into everything.

AI facilities are not classic data centers. AI facilities are factories.

And here’s the segue to tokens. In Jensen’s worldview, AI factories produce tokens, just like a factory produces physical goods.

Jensen expects this AI factory industry to eventually amount to trillions of dollars.

“We’re the only technology company in the world that talks about libraries non-stop”

And Jensen is going to show us a few new libraries today.

But first, here’s a preview of what NVIDIA is going to be showing today.

And that was a montage of various projects, technologies, and products that NVIDIA’s partners have created using NVIDIA’s technologies.

Now on to some hardware.

Starting with the GeForce RTX 5060, along with a new MSI laptop built around the laptop version of the RTX 5060.

Jensen is demoing AI upscaling (DLSS) to cut down on rendering workloads.
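As a rough illustration of why upscaling cuts rendering work, the sketch below counts the pixels actually shaded per frame. It assumes DLSS’s Performance mode, which renders at half the output resolution on each axis (1080p internally for a 4K output); the function names are hypothetical, and the cost of the upscaling pass itself is ignored.

```python
def pixels(width: int, height: int) -> int:
    """Number of pixels in a frame of the given resolution."""
    return width * height


def render_fraction(out_w: int, out_h: int, axis_scale: float) -> float:
    """Fraction of output pixels rendered when each axis is scaled by axis_scale."""
    internal = pixels(round(out_w * axis_scale), round(out_h * axis_scale))
    return internal / pixels(out_w, out_h)


if __name__ == "__main__":
    # Assumption: Performance mode = 50% scale per axis (4K output, 1080p internal).
    frac = render_fraction(3840, 2160, 0.5)
    print(f"Rendered pixels: {frac:.0%} of native 4K")  # Rendered pixels: 25% of native 4K
```

Because the scale applies to both axes, halving each dimension leaves only a quarter of the pixels to shade; the AI model fills in the rest.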

“Ladies and gentlemen: GeForce”

“Now 90% of our keynotes aren’t about GeForce. But it’s not because we don’t love GeForce. RTX 50 series just had its most successful launch ever; our fastest launch in our history”

And that was GeForce. Now on to libraries.

Jensen is talking about libraries, and how naive hardware acceleration can only go so far. The better that applications can be optimized for hardware acceleration – the more developers can tell the hardware about what’s going on – the more they can benefit from acceleration.

These days NVIDIA has many, many CUDA-X libraries. Weather, quantum computing, physics, medical, computational lithography, and of course, deep learning.

These libraries, in turn, open up markets to NVIDIA.

And NVIDIA wants to get into the telecom industry in a big way.

Multiple partners are doing 6G technology trials that incorporate NVIDIA AI hardware.

And, of course, agentic AI, which has been another huge push from NVIDIA over the past year: AI models/agents that specialize in different subjects/tasks, which are then linked back together. Reasoning models, chain-of-thought, mixture of experts. Understand, think, and act.

Understand, think, and act is also the robot loop. Agentic AI is basically software robots.

Which segues into physical robotics. Robotics AI needs to be able to reason about physical cause and effect and object permanence.

And now on to Grace Blackwell.

“Scaling up is incredibly hard. […] But that’s what Grace Blackwell does”

Grace Blackwell is in full production; Blackwell has been in full production as HGX systems since last year. In Q3 of 2025, NVIDIA will increase the performance of the platform with the previously announced GB300 hardware.

GB300 is Grace Blackwell paired with a faster Blackwell Ultra GPU, offering more performance and more memory.

Jensen is comparing GB300 to the 2018 Sierra supercomputer, which was based on Volta. (I presume he’s comparing GB300’s AI performance to the total performance of Sierra’s Volta GPUs.)

A single NVLink Spine moves more traffic than the entire Internet (112.5TB/sec).
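For a sense of scale, the arithmetic below compares the two sides of that claim. It rests on two assumptions not stated in this live blog: that the spine’s throughput equals the aggregate NVLink bandwidth of a 72-GPU NVL72 rack at NVIDIA’s published 1.8TB/sec per Blackwell GPU, and that the 112.5TB/sec figure corresponds to roughly 900Tbit/sec of peak Internet traffic expressed in bytes.

```python
GPUS_PER_RACK = 72             # GPUs in one NVL72 rack
NVLINK_TBPS_PER_GPU = 1.8      # assumed NVLink bandwidth per GPU, TB/sec
INTERNET_PEAK_TBITPS = 900     # assumed peak Internet traffic, Tbit/sec

spine_tbps = GPUS_PER_RACK * NVLINK_TBPS_PER_GPU   # aggregate, TB/sec
internet_tbps = INTERNET_PEAK_TBITPS / 8           # convert bits to bytes

print(f"NVLink Spine:  ~{spine_tbps:.1f} TB/sec")
print(f"Internet peak: ~{internet_tbps:.1f} TB/sec")
print(f"Ratio: {spine_tbps / internet_tbps:.2f}x")
```

Under those assumptions the spine comes out at roughly 130TB/sec, a bit more than the 112.5TB/sec Internet figure.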

“This is as far as any SerDes is ever driven”

And that limit is why NVIDIA put everything into one rack – and why they went with liquid cooling. Distance is at a premium.

“Once we scale it up, then we can scale it out into large systems”

Jensen is showing some photos of AI data centers/factories. In some cases the racks have to be spaced apart due to their power density: over 100kW per rack.

And to further his AI factory analogy, Jensen is comparing Oracle’s Stargate project build-out to building a traditional factory.

Now rolling a short video on Blackwell.

This is a good video, though it’s moving far too fast to capture all of the good fabbing shots!

“We couldn’t be prouder of what we achieved together”

(I think I saw a shot in there from Patrick’s Dell tour from last week?!)

NVIDIA is also going to be building an AI supercomputer for Taiwan in conjunction with Foxconn, NSTC, and TSMC.

“All of that is so we can build a very large chip”

“This is a massive industrial investment”

New announcement: NVLink Fusion.

NVLink Fusion will allow vendors to build semi-custom infrastructure incorporating NVLink. You won’t be stuck with just an NVIDIA accelerator if you want NVLink.

NVLink Fusion will allow third-party accelerators to be augmented with NVLink capabilities by using an NVLink chiplet that connects to the accelerator, along with IP that needs to go into the ASIC itself to connect to the NVLink chiplet and to support NVLink C2C for connecting back to an NVIDIA CPU.

Custom CPUs are also on the table, so long as they implement NVLink C2C, so that they can connect to NVIDIA’s GPUs. NVIDIA previously announced NVLink C2C licensing, and the CPU use cases for NVLink Fusion are an extension of that program.

Qualcomm and Fujitsu are doing CPUs, while Marvell, MediaTek, Astera Labs, and Alchip are doing accelerators. NVIDIA is basically looking to leverage its experience and technology in scale-up computing, which the company considers the harder task.

Now on to smaller systems.

DGX Spark is in full production and will be available in a few weeks. This is NVIDIA’s previously announced Project DIGITS box. The compact box is meant for prototyping within the NVIDIA ecosystem.

NVIDIA’s OEM partners will also be making similar systems using NVIDIA’s chips.

“One thing for sure: everybody can have one for Christmas”

OEMs will also be building more powerful DGX Station systems (previously announced at GTC 2025).

DGX Station is about as powerful as can be fed by a standard electrical outlet. (In the USA, that’s normally ~1.5kW.)
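The ~1.5kW figure falls out of simple arithmetic. The sketch below assumes a common US branch circuit of 120V at 15A, and the usual electrical-code practice of limiting continuous loads to 80% of a circuit’s rating; real limits depend on the specific circuit and local code, and the helper name is hypothetical.

```python
NOMINAL_VOLTS = 120            # typical US outlet voltage (assumption)
BREAKER_AMPS = 15              # common branch-circuit rating (assumption)
CONTINUOUS_LOAD_FACTOR = 0.8   # 80% derating for continuous loads


def outlet_watts(volts: float, amps: float, load_factor: float = 1.0) -> float:
    """Power available from a circuit, optionally derated for continuous loads."""
    return volts * amps * load_factor


peak = outlet_watts(NOMINAL_VOLTS, BREAKER_AMPS)
continuous = outlet_watts(NOMINAL_VOLTS, BREAKER_AMPS, CONTINUOUS_LOAD_FACTOR)
print(f"Peak: {peak:.0f} W, continuous: {continuous:.0f} W")  # Peak: 1800 W, continuous: 1440 W
```

A workstation that runs flat-out for hours counts as a continuous load, which is why the practical ceiling lands near 1.44kW rather than the nominal 1.8kW.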

Now transitioning back to software.

Jensen sees agentic AIs as the solution to growing labor shortages.

IT will become the HR department for digital workers, which means NVIDIA needs to create the necessary tools to manage those AI agents.

“But first, we have to reinvent computing.”

Now rolling another video about RTX Pro-based servers and server racks.

Announcing RTX Pro Server.

It’s an enterprise and Omniverse server: x86-based, with RTX Pro PCIe cards.

This appears to be the successor to NVIDIA’s PCIe-based accelerators. No GB200 GPU-based PCIe card has been announced; instead, NVIDIA is going to lean heavily on RTX Pro 6000 cards, which are based on the GB202 GPU.

And in RTX Pro servers, the GPUs will also have direct network access, so that GPUs across multiple servers can all be connected to each other.

This is essentially NVIDIA’s solution for workloads that need graphics capabilities (and to a lesser extent, traditional rackmount servers with x86 compatibility). GB200 has very limited graphics capabilities, by comparison.

“If you’re building enterprise AI, we now have a great server for you”

“Whether it’s x86 or AI, it just runs”

“This is likely the largest go-to market of any system we have taken to market.”

Shifting gears again, this time to storage.

NVIDIA AI Query: AI-Q.

GPUs sitting on top of storage.

Jensen is talking up NVIDIA’s model performance, particularly with models they’ve post-trained.

Now rolling a short video about how partner Vast is using NVIDIA’s AI infrastructure and various models.

In the future, IT will have AI Operations.

CrowdStrike is one of many partners working with NVIDIA.

Now shifting gears once more, this time to robotics.

Jensen is recapping NVIDIA’s Newton physics engine, which was a joint project with Disney and DeepMind, and was previously shown off at GTC 2025.

Rolling a video (I think this may be the same or similar to the video they used at GTC?)

This is a simulation video, not an animation video, demonstrating the simulation of both rigid-body and soft-body physics.

And shifting gears, a bit more literally this time, on to the subject of automotive electronics.

“I love it if you buy everything from me. But please buy something from me”

Isaac Gr00t is substantially built on hardware that is also used in NVIDIA’s automotive products, such as the (Jetson) Thor SoC.

The Isaac Gr00t N1.5 model is being released, and is open-source.

Also announcing Isaac Gr00t-Dreams.

The idea is to use AI to amplify human demonstrations, reducing the amount of human work needed to train AI systems.

Rolling another video.

Rather than needing a human to demonstrate a specific action, the AI is prompted with words to try things, and the results are then simulated by Cosmos.

It’s certainly ambitious, if not a bit audacious.

Humanoid robotics is so important because humanoid robots can fit into the existing world.

Jensen thinks that humanoid robotics will one day be a trillion dollar industry (and he wants a piece of it for NVIDIA).

“The factories are also robotic”

So it’s robots all the way down.

And here comes the plug for Omniverse: making a digital twin of a factory, which is then used to train the robots that work in that factory.

TSMC is even building a digital twin of their next fab (fab piping is ridiculously complex, especially when you want to make changes).

Rolling another video on “software-defined manufacturing” in Taiwan.

The age of industrial AI is here. Powered by Omniverse.

“It stands to reason that this is an extraordinary opportunity for Taiwan.”

“It stands to reason that AI and robotics will transform everything we do.”

“GeForce brought AI to the world. Then AI came back and transformed GeForce.”

“It’s been a great pleasure to work with you. Thank you.”

Now rolling a video of a flying NVIDIA office building?

NVIDIA Constellation.

NVIDIA is growing beyond the limits of its current office in Taiwan, so NVIDIA is building a new Taiwanese office. It’s one of the largest products that NVIDIA has (technically) ever built.

A site has been selected, and NVIDIA has acquired the lease for the land. NVIDIA is going to start building it as soon as they can.

Jensen is now wrapping up his keynote.

“I look forward to partnering with all of you.”

And that’s a wrap! Be sure to join us at 11pm Pacific/2am Eastern/06:00 UTC for the next keynote of the day: Qualcomm.
